Designing vehicles with the power of diffusion models

How we created a self-hosted, AI-powered platform for vehicle designers.

Project background

Today, AI image generation offers incredible potential but often lacks the focus needed for professional workflows. Many tools are general-purpose, serving everything from fantasy art to advertising campaigns. For our long-term client, we addressed this issue directly — over the course of a year, we built an internal-use, AI-aided design platform specifically tailored for vehicle designers.

The client's employees can create, edit, and export images. The platform also supports training mini-models on users’ sketches, creating and sharing custom presets, building and curating galleries, running copyright checks, and more.

Initially, our work centered on UX flows and front-end web component development. As the project evolved, we expanded into full-stack web development and extended the core image-creation functionality.

Components and features

The core of the platform is a web application, hosted internally by the client for compliance reasons. In the web application, we’ve designed and built a series of mini-tools, which users can use to focus on one specific part of the workflow at a time.

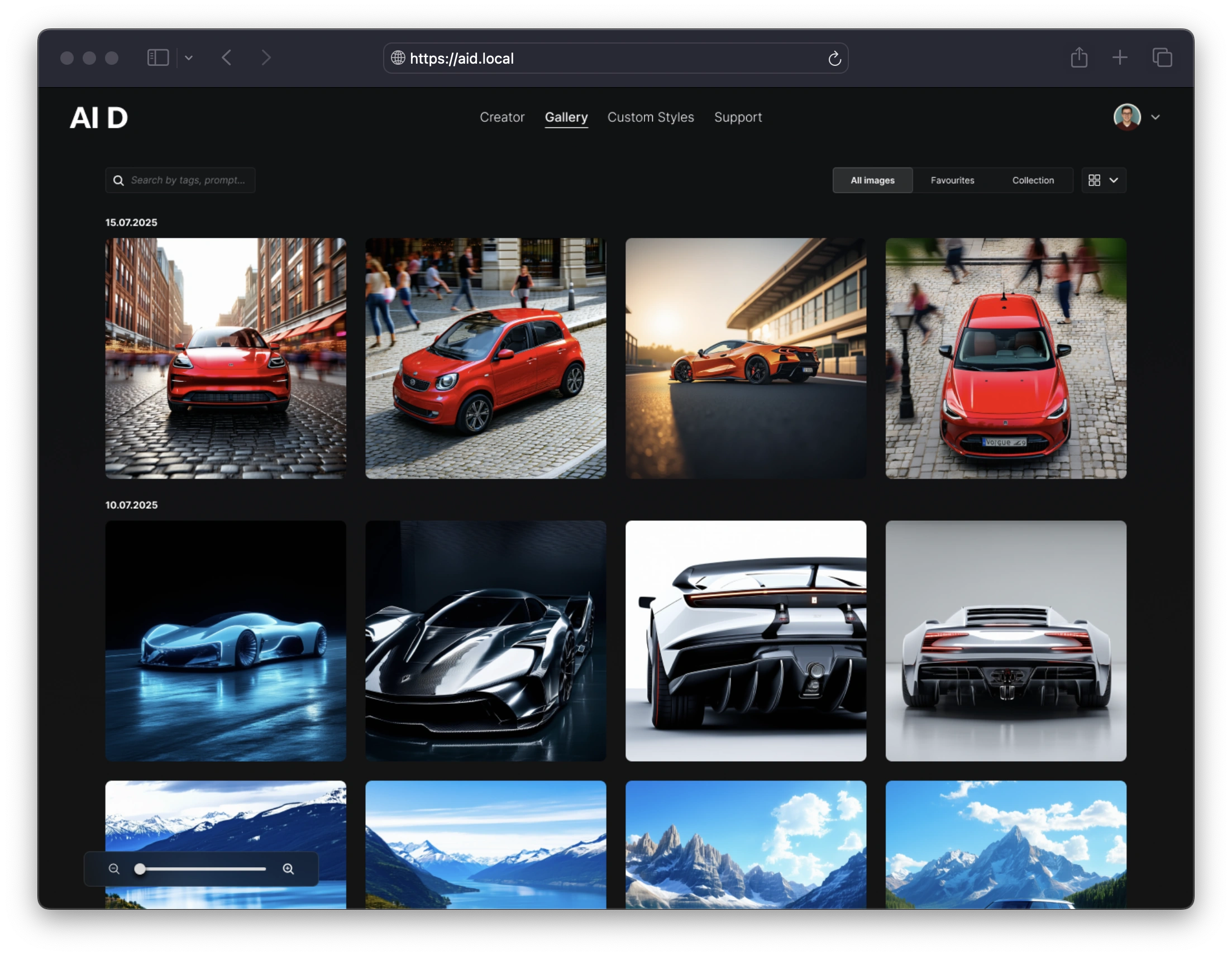

For example, here is the gallery prepared for the presentation. Users are their own curators — they are able to tag images with arbitrary keywords, create collections, use search and filtering, upscale images, and freely convert them between multiple resolutions and formats.

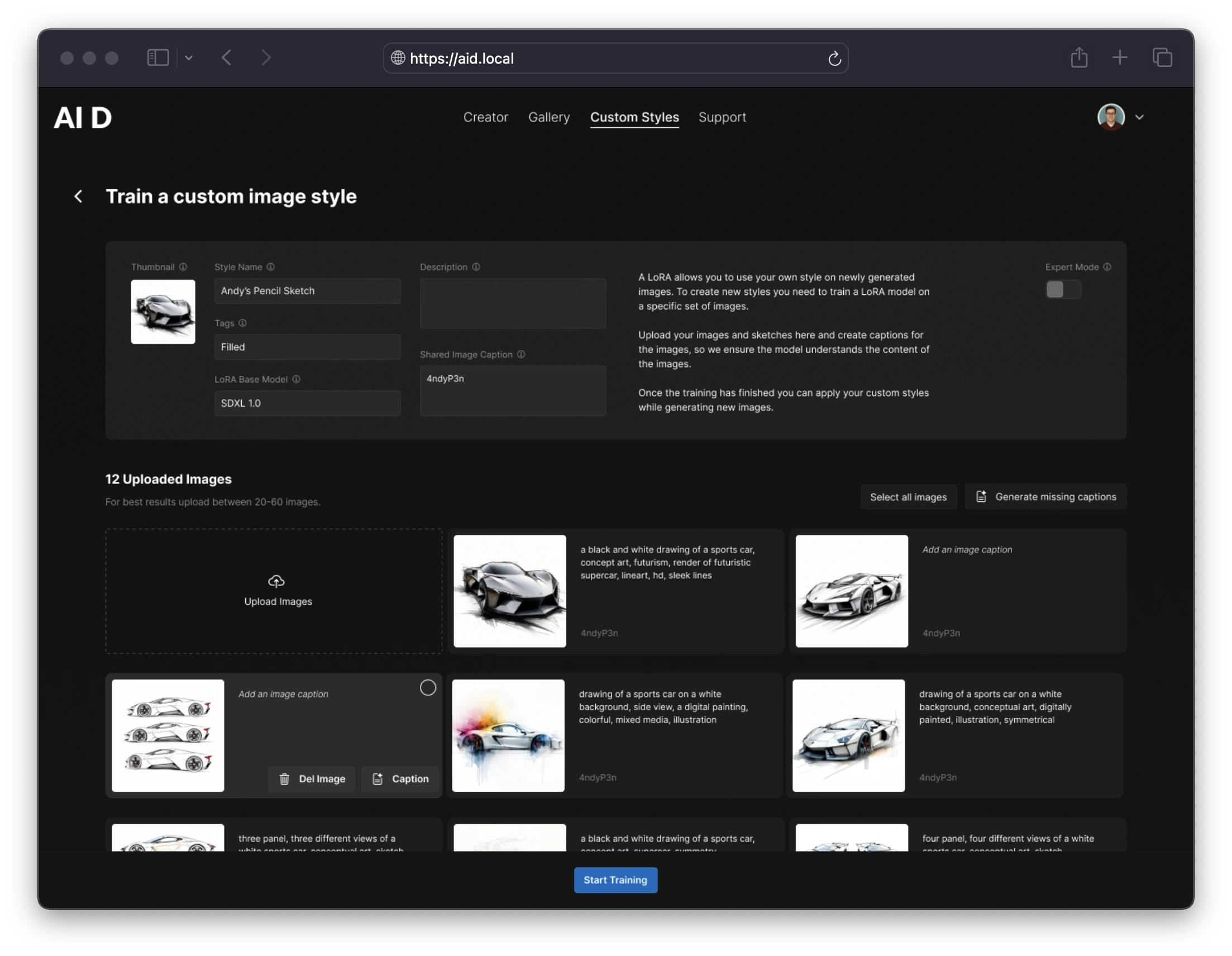

One particularly powerful feature of the platform is the ability to train mini-models on employee sketches. Once trained, the designers can diffuse images in their own particular style.

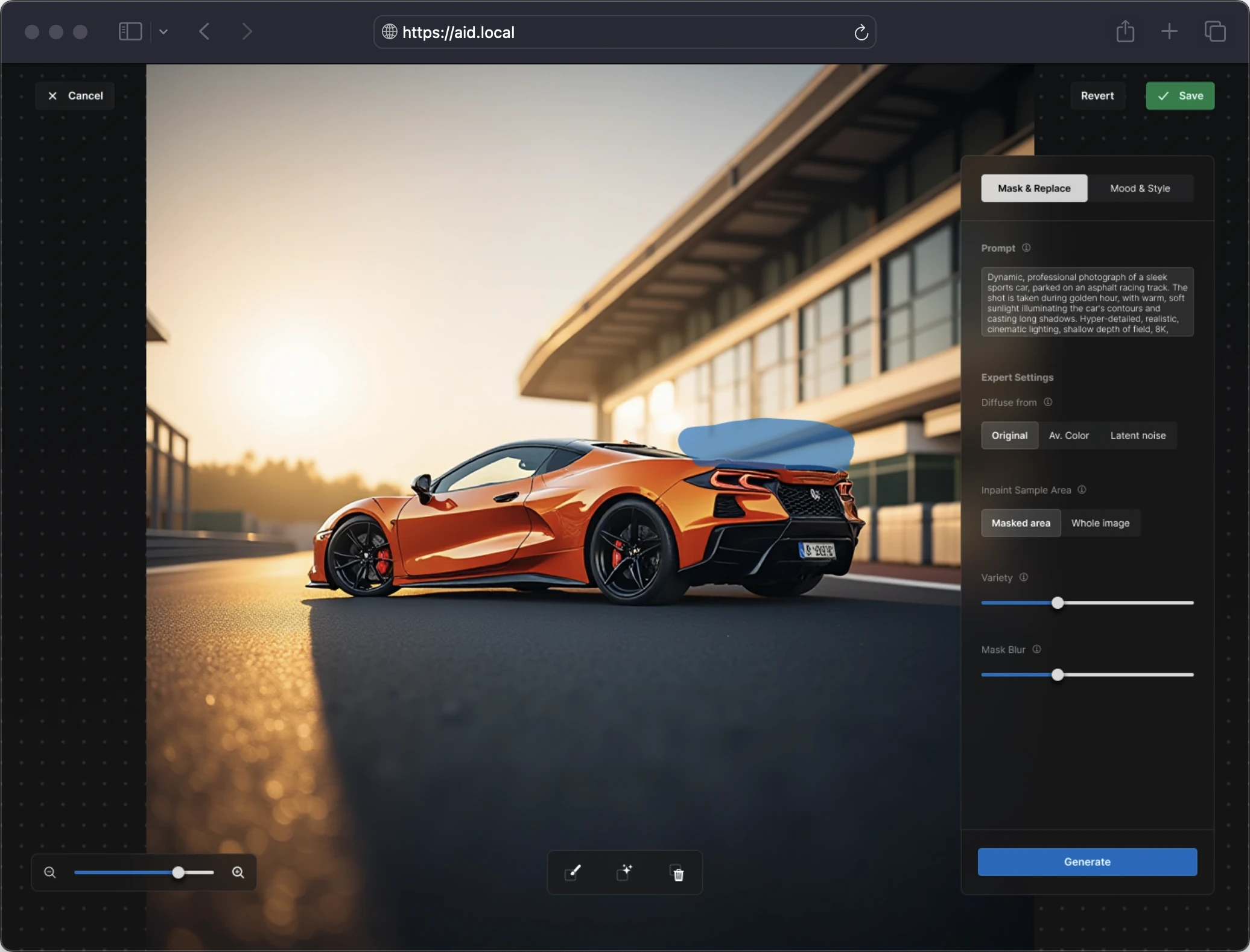

Designers use mask and replace (also known as inpainting) to isolate and regenerate parts of an image. It is a perfect tool for trying things out quickly, for example jumping between different headlight or rim variations.
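To make the idea of a mask concrete, here is a minimal sketch of the compositing step that keeps changes inside the masked area. It is an illustration only, not the platform's code: the function name `compositeWithMask` and the flat grayscale arrays are assumptions, and the regeneration itself is done by the diffusion model, which is not shown.

```typescript
// Illustrative only: images are flat arrays of grayscale values (0..255),
// and the mask holds one weight per pixel (0 = keep original, 1 = use generated).
function compositeWithMask(
  original: number[],
  generated: number[],
  mask: number[],
): number[] {
  if (original.length !== generated.length || original.length !== mask.length) {
    throw new Error("original, generated, and mask must have the same length");
  }
  return original.map((originalPixel, i) => {
    // Lengths are checked above; the fallbacks only satisfy strict index checks.
    const weight = mask[i] ?? 0;
    const generatedPixel = generated[i] ?? originalPixel;
    // Linear blend: masked pixels come from the generated image,
    // unmasked pixels stay exactly as they were.
    return Math.round(weight * generatedPixel + (1 - weight) * originalPixel);
  });
}

// Only the last two pixels are masked; the last one is a soft (feathered) edge.
const blended = compositeWithMask(
  [10, 20, 30, 40],
  [200, 200, 200, 200],
  [0, 0, 1, 0.5],
);
```

Pixels outside the mask are returned unchanged, which is why a designer can swap headlights or rims without the rest of the vehicle drifting.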

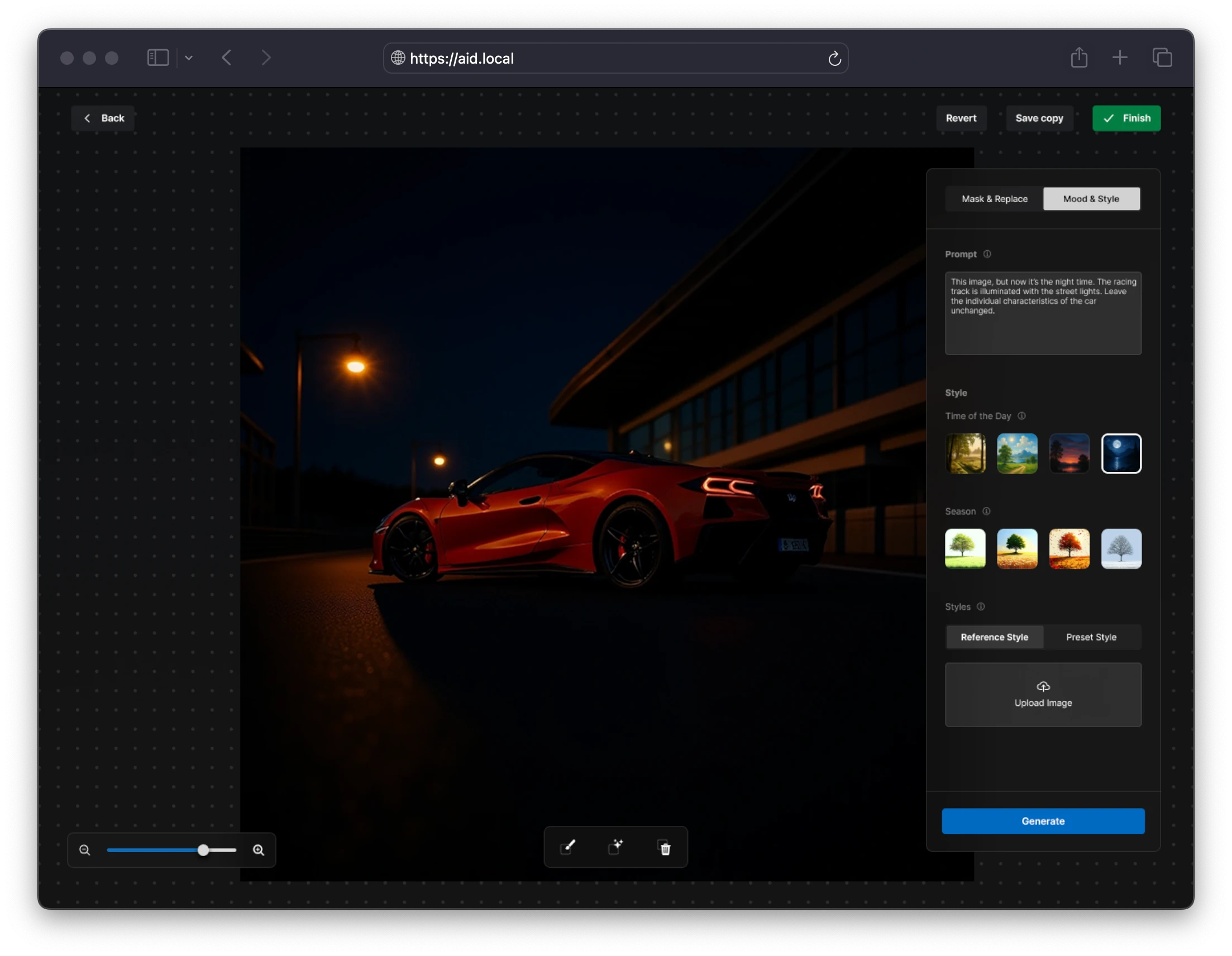

Contextual editing allows quick and precise edits — changing the time of day in the image, weather conditions, background, colors, and materials of the subject. This can all be done by describing the edit, providing references, or directly via presets in the interface.

Intuitive and robust

From the start, we prioritized an intuitive and consistent user experience. We deliberately focused on adding only sensible features to avoid interface clutter. Instead of reinventing powerful image-editing software in the browser, we opted for seamless copy-and-paste functionality between edit mode in our AI platform and other software.

The platform's backend received the same care. A modular architecture avoided lock-in to any single diffusion backend or model, which let us adopt the latest models and features as they were released during development.
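One common way to get this kind of modularity is an adapter layer: the application speaks a single neutral request format, and each diffusion backend gets a small adapter that translates it. The sketch below shows that pattern under stated assumptions. Every name in it (`GenerationRequest`, `DiffusionBackend`, `BackendRegistry`, the two placeholder adapters, their paths and payloads) is hypothetical and does not mirror the platform's code or any real backend API.

```typescript
// Backend-neutral request: the only shape the rest of the app needs to know.
interface GenerationRequest {
  prompt: string;
  width: number;
  height: number;
}

// What an adapter produces: a path plus a JSON-serializable body.
interface BackendCall {
  path: string;
  body: Record<string, unknown>;
}

// Hypothetical adapter contract; each diffusion backend gets one implementation.
interface DiffusionBackend {
  readonly id: string;
  toCall(request: GenerationRequest): BackendCall;
}

// Placeholder adapter for a backend that accepts flat parameters.
const flatBackend: DiffusionBackend = {
  id: "flat",
  toCall: (r) => ({
    path: "/generate",
    body: { prompt: r.prompt, size: `${r.width}x${r.height}` },
  }),
};

// Placeholder adapter for a backend that accepts a node graph.
const graphBackend: DiffusionBackend = {
  id: "graph",
  toCall: (r) => ({
    path: "/run-workflow",
    body: {
      nodes: [
        { type: "text", value: r.prompt },
        { type: "canvas", width: r.width, height: r.height },
      ],
    },
  }),
};

// The registry is the single place where backends are added or swapped.
class BackendRegistry {
  private readonly backends = new Map<string, DiffusionBackend>();

  register(backend: DiffusionBackend): void {
    this.backends.set(backend.id, backend);
  }

  resolve(id: string): DiffusionBackend {
    const backend = this.backends.get(id);
    if (!backend) throw new Error(`Unknown diffusion backend: ${id}`);
    return backend;
  }
}

const registry = new BackendRegistry();
registry.register(flatBackend);
registry.register(graphBackend);

const designRequest: GenerationRequest = {
  prompt: "concept car, side view",
  width: 1024,
  height: 576,
};
const flatCall = registry.resolve("flat").toCall(designRequest);
```

With this shape, supporting a newly released backend means writing one more adapter and registering it, while the UI and the rest of the server keep using `GenerationRequest` unchanged.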

The end result

We conducted over a dozen user interviews, developed and tested countless prototypes, and created our own design system. Before the release, we also ensured that all software components adhered to appropriate licenses, giving the client peace of mind regarding compliance. The platform successfully went live for internal use on the client’s infrastructure, with more than 100 users having access to it.

Some of the technologies that we’ve used for this project:

- Figma for wireframing and prototyping

- Our own design system with open-sourced icons and carefully designed color palettes

- Svelte 5 for high-fidelity prototypes and final web development

- A mix of SvelteKit and Node with Express for backend duties, integrated with an existing custom AWS DevOps pipeline

- Both A1111 and ComfyUI as the diffusion middleware (neither is visible to the end user)

Want something similar?

We create custom workflows to address your challenges specifically. Get in touch, and let us help you.